Stevo wrote: Thu Jul 31, 2025 8:01 pm

They are magically bloated in size compared to natively built applications, though.

Being a very old geek who started out on a Commodore Super PET, then moved to a PDP-11/70 running RSTS/E, and having written some geek books that won an award or two, please allow me to ramble a bit. You can get more background by reading this book and this enjoyable post quoting one of the founders of Agile.

DOS won

Flatpaks and AppImages are the Linux world's way of admitting DOS won without actually saying it. We face this a lot in the embedded systems world. I would even go so far as to throw Docker and the other "container" systems into the admission category as well.

The rest of this post will be a barefoot-in-the-snow-uphill-both-ways defense of the above, so feel free to zone out.

We will skip over the RL removable pack days and their whopping 1.2 MEG storage capacity and start our conversation with the RA60 and its just-over-200 MB storage capacity, which could be formatted for either 16-bit or 18-bit words. The 11/70 could have around 2MB of physical RAM, but each running instance was limited to 64K words. Your program, its data, and however much of the OS it needed all had to fit in those 64K words. There were shared system libraries but, more importantly, installed images. Instead of each user running a copy of the default editor, there was one copy installed in RAM, and each user had their own "data area" (for lack of a better term) that held what they were doing.
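A rough Python sketch of the installed-image idea, purely illustrative: one shared piece of editor code (the hypothetical `EditorImage`) and a separate per-user data area (the hypothetical `DataArea`). Python objects obviously aren't PDP-11 memory; the point is only that the code exists once and every user's state lives outside it.

```python
from dataclasses import dataclass, field


@dataclass
class DataArea:
    """Per-user state: the only thing each user gets a private copy of."""
    user: str
    buffer: list[str] = field(default_factory=list)


class EditorImage:
    """Hypothetical shared editor 'image': code only, no per-user state."""

    def insert_line(self, area: DataArea, text: str) -> None:
        # The shared code only touches the data area it is handed,
        # so any number of users can run it at the same time.
        area.buffer.append(text)

    def line_count(self, area: DataArea) -> int:
        return len(area.buffer)


# One copy of the code "installed in RAM"...
EDITOR = EditorImage()

# ...and one small data area per logged-in user.
areas = {name: DataArea(name) for name in ("alice", "bob")}
EDITOR.insert_line(areas["alice"], "10 PRINT 'HELLO'")
EDITOR.insert_line(areas["alice"], "20 END")
EDITOR.insert_line(areas["bob"], "TODO: fix payroll")
```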

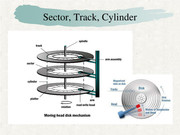

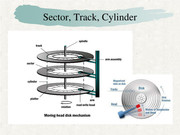

Both IBM and DEC standardized on 512-byte disk blocks, and later the PC world adopted the same. IBM, focusing on high volume, allocated storage in tracks and cylinders. They also called blocks "sectors."
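For anyone who never had to do it by hand, turning a cylinder/head/sector address into a flat block number uses the standard formula LBA = (C * heads + H) * sectors_per_track + (S - 1), with sectors counted from 1. A small Python sketch; the example geometry (40 cylinders, 2 heads, 9 sectors per track of 512 bytes) is that of a 360K double-sided floppy, picked only for illustration.

```python
SECTOR_SIZE = 512  # bytes per block/sector


def chs_to_lba(c: int, h: int, s: int, heads: int, sectors_per_track: int) -> int:
    """Convert cylinder/head/sector to a logical block address.

    Cylinders and heads count from 0; sectors count from 1.
    """
    if not 1 <= s <= sectors_per_track:
        raise ValueError("sector out of range")
    if not 0 <= h < heads:
        raise ValueError("head out of range")
    return (c * heads + h) * sectors_per_track + (s - 1)


def lba_to_chs(lba: int, heads: int, sectors_per_track: int) -> tuple[int, int, int]:
    """Inverse mapping: logical block address back to (C, H, S)."""
    c, rem = divmod(lba, heads * sectors_per_track)
    h, s0 = divmod(rem, sectors_per_track)
    return c, h, s0 + 1


# Geometry of a 360K 5.25" floppy: 40 cylinders, 2 heads, 9 sectors/track.
CYLS, HEADS, SPT = 40, 2, 9
capacity = CYLS * HEADS * SPT * SECTOR_SIZE  # 368,640 bytes = 360K
```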

DEC and many other midrange companies developed the concept of "paged memory" for their virtual memory operating systems. A vestige of this exists in Linux, called swap. Whether it's a swap file or a swap partition, it's where pages of memory get written out and read back in when physical RAM runs short.
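Roughly what a pager does when RAM runs short, as a toy Python simulation: a fixed number of physical frames, a string of virtual page references, and least-recently-used eviction. LRU is chosen here only because it is simple to show; it is not a claim about what RSTS/E, VMS, or the Linux kernel actually implement, and real kernels use more elaborate approximations.

```python
from collections import OrderedDict


def simulate_lru(references: list[int], frames: int) -> tuple[int, list[int]]:
    """Count page faults for a reference string with `frames` physical frames.

    Returns (fault_count, pages_evicted_in_order).
    """
    resident = OrderedDict()  # page -> None, least recently used first
    faults = 0
    evicted: list[int] = []
    for page in references:
        if page in resident:
            resident.move_to_end(page)  # hit: now the most recently used
            continue
        faults += 1  # miss: the page has to be read in from swap
        if len(resident) >= frames:
            victim, _ = resident.popitem(last=False)  # page out the LRU page
            evicted.append(victim)
        resident[page] = None
    return faults, evicted
```

With three frames, the reference string `[1, 2, 3, 1, 4, 2]` faults five times and pages out 2, then 3.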

Because we were trying to run north of 64 users with 2MB of RAM, we had to have shared libraries and installed images. UNIX, and later Linux, copied this. In Dennis Ritchie's defense, he was creating the C programming language and UNIX on a PDP, so he had the same resource restrictions.
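The arithmetic behind that, using only the numbers already given (around 2MB of RAM, 64 users, a 64K-word per-instance limit, 16-bit words): if every user got a private copy of everything, each would get a quarter of one full job's address space, before the OS took its cut.

```python
WORD_BYTES = 2                 # PDP-11 word = 16 bits
RAM_BYTES = 2 * 1024 * 1024    # "around 2MB of physical RAM"
USERS = 64                     # "north of 64 users"
JOB_LIMIT_BYTES = 64 * 1024 * WORD_BYTES  # the 64K-word per-instance limit

per_user_bytes = RAM_BYTES // USERS                     # 32K bytes each
fraction_of_job_limit = per_user_bytes / JOB_LIMIT_BYTES
```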

Stupid decisions gave us the IBM PC and its 640K initial memory limit. In the case of IBM, the sales team determined that more memory than that would cost close to a million dollars and nobody could afford it, so they had the engineers allocate the 384K region above 640K to add-in cards like video. In the case of DEC, engineers built a Micro-PDP that could be sold for the same $5K price tag and would run all or most of the existing PDP software. Sales did not want that cheap thing cutting into the market for the $250K mid-range units making up the bulk of their commissions . . . You've never heard of it because they didn't release it. In Ken Olsen's defense, DEC did get into PCs with the Rainbow at the tail end of the CP/M days, and took a beating there.

IBM also chose a processor that required SEGMENT:OFFSET addressing. Each segment was 64K bytes in size. DOS was originally limited to 10 working segments for everything, and it could not swap. Hey, those 360K floppies were slow, noisy, grinding beasts anyway. The whopping 10MB full-height hard drives weighing more than most bowling balls weren't quiet speed demons either. SEGMENT:OFFSET forced the concept of memory models on the software development world. The chart there is okay; it just doesn't tell the whole story. In fact, he has small and compact reversed.

- Compact: the .com extension, usually written in assembly language and specially linked. Both executable and data had to fit in a single segment.

- Small: one segment each for code and data

- Medium: multiple code segments, only one data segment

- Large: multiples of each
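The real-mode SEGMENT:OFFSET arithmetic itself, in a few lines of Python: the 16-bit segment is shifted left 4 bits (multiplied by 16) and added to the 16-bit offset, giving a 20-bit physical address. That 1MB address space is where 640K + 384K comes from, and 640K divided into 64K segments is the 10 segments mentioned above. Note that many different segment:offset pairs alias the same physical byte.

```python
def physical_address(segment: int, offset: int) -> int:
    """8086/8088 real mode: physical = segment * 16 + offset (20-bit bus)."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset are 16-bit values")
    # With only 20 address lines, the 8088 wraps addresses at 1MB.
    return ((segment << 4) + offset) & 0xFFFFF


SEGMENT_SIZE = 0x10000          # 64K bytes reachable from one segment value
ADDRESS_SPACE = 1 << 20         # 1,048,576 bytes
CONVENTIONAL = 640 * 1024       # the 640K DOS programs could use
UPPER = ADDRESS_SPACE - CONVENTIONAL  # the 384K left for video, ROMs, cards

# Color text-mode video memory lives at segment 0xB800 -> physical 0xB8000.
video = physical_address(0xB800, 0x0000)
```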

Many a programmer got burned incrementing a pointer when the comparison only looked at OFFSET: cross a segment boundary and OFFSET wraps back to zero while SEGMENT stays put, so the comparison gives the wrong answer.
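A Python simulation of that trap, assuming a plain far pointer whose increment touches only the 16-bit OFFSET. The normalized "huge" pointers that DOS C compilers offered carried into the segment instead; `huge_increment` below is a simplified imitation of that, ignoring the 1MB wrap. Walking past offset 0xFFFF with the far pointer lands you back at the start of the same segment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FarPtr:
    segment: int
    offset: int

    def increment(self, n: int = 1) -> "FarPtr":
        # Far-pointer arithmetic: only OFFSET changes, and it wraps at 64K.
        return FarPtr(self.segment, (self.offset + n) & 0xFFFF)

    def linear(self) -> int:
        return ((self.segment << 4) + self.offset) & 0xFFFFF


def huge_increment(p: FarPtr, n: int = 1) -> FarPtr:
    """Normalized ('huge') arithmetic: carry into the segment instead."""
    linear = p.linear() + n
    return FarPtr(linear >> 4, linear & 0xF)


start = FarPtr(0x2000, 0xFFFE)
far_steps = [start.increment(i) for i in range(4)]
huge_steps = [huge_increment(start, i) for i in range(4)]
# far_steps[2] points back at 0x20000; huge_steps[2] correctly reaches 0x30000.
```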

Anyway, DOS (Disk Operating System) didn't do much. Yes, we had external commands like EDLIN, FORMAT, PRINT, etc., but the OS itself didn't do much. It was COMMAND.COM and fit in 64K bytes. Once loaded, it stayed there forever. Programs did most everything via INT 21h. We communicated directly with the machine's BIOS: very slow code burned into EPROM (later flashable) on the motherboard.
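INT 21h was a single software-interrupt entry point, with the function number in the AH register selecting the service. As a Python mock-up (not real DOS), here is a dispatcher keyed on AH. The three services are real INT 21h functions: 02h writes the character in DL, 09h writes a "$"-terminated string at DS:DX, and 4Ch terminates with the return code in AL. Registers are just a dict here, and the `memory` argument standing in for the DS:DX buffer is an assumption of this sketch.

```python
import io


class ProgramExit(Exception):
    def __init__(self, code: int) -> None:
        super().__init__(code)
        self.code = code


def int21h(regs: dict, stdout: io.StringIO, memory: bytes = b"") -> None:
    """Mock INT 21h: dispatch on AH the way DOS did."""
    ah = regs["AX"] >> 8
    if ah == 0x02:    # display output: character in DL
        stdout.write(chr(regs["DX"] & 0xFF))
    elif ah == 0x09:  # print string terminated by '$' (memory stands in for DS:DX)
        end = memory.index(b"$")
        stdout.write(memory[:end].decode("ascii"))
    elif ah == 0x4C:  # terminate program, return code in AL
        raise ProgramExit(regs["AX"] & 0xFF)
    else:
        raise NotImplementedError(f"INT 21h AH={ah:02X}h not mocked")


out = io.StringIO()
int21h({"AX": 0x0900, "DX": 0}, out, b"Hello, DOS$")
int21h({"AX": 0x0200, "DX": ord("!")}, out)
try:
    int21h({"AX": 0x4C03}, out)
except ProgramExit as e:
    exit_code = e.code
```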

Yes, this was a long journey, but you needed to walk it to appreciate the rest.

Many early computers were sold with a single floppy drive because computers were expensive. When you wanted to copy a file from one floppy to another, DOS would read a chunk, tell you to swap floppies, write a chunk, tell you to swap floppies . . .
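The single-drive shuffle is just a chunked copy through whatever RAM was free. A Python sketch that returns the sequence of operations and (paraphrased, not DOS's exact wording) prompts, so you can count the disk swaps; the file and buffer sizes in the example are made-up numbers.

```python
def single_drive_copy(file_size: int, buffer_size: int) -> list[str]:
    """Return the operations/prompts for copying a file with only one drive."""
    if buffer_size <= 0:
        raise ValueError("buffer_size must be positive")
    steps: list[str] = []
    copied = 0
    while copied < file_size:
        chunk = min(buffer_size, file_size - copied)
        steps.append(f"read {chunk} bytes from source")
        steps.append("Insert TARGET diskette")
        steps.append(f"write {chunk} bytes to target")
        copied += chunk
        if copied < file_size:  # more to go: back to the source disk
            steps.append("Insert SOURCE diskette")
    return steps


steps = single_drive_copy(file_size=100_000, buffer_size=40_000)
swaps = sum(1 for s in steps if s.startswith("Insert"))
```

Three chunks means five swaps; halve the free RAM and the swapping roughly doubles.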

Because of poor decisions, DOS executables had to have everything linked into them. In the Linux world we call it static linking. The upside was that most programs were immune to operating system changes. It wasn't until the era of DOS extenders and memory managers like HIMEM.SYS that we had much interaction with the OS.

On the midrange and mainframe platforms, we had computer operators, data centers, and system managers. Any operating system changes were tested before they went into production. Despite all of our programs interacting with the OS, we were insulated from it.

Contrast that to what we have with Windows and Linux today.

- Use of Agile instead of actual Software Engineering.

- Automated test scripts that often test nothing.

- Updates force fed to computers.

- Users have a working system when they go to bed and find something busted the next day.

Gotta love being old enough to remember when Ubuntu pushed out a networking "update" that was obviously never tested. It deleted support for the Broadcom network chips that were in something like 70-90% of all laptops at the time. People with only one computer were left twisting in the breeze.

KDE is notorious for pushing out updates to Akonadi, its central PIM database engine, that make incompatible changes to content which is inexplicably stored in .cache, and they never clear .cache as part of the update. Whole systems hang, not just email, contacts, etc.
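The fix that complaint implies is ordinary cache invalidation: stamp the cache with the format version that wrote it, and wipe it when the installed version differs. A minimal Python sketch; the `.format-version` marker file and the `ensure_cache` function are hypothetical, not anything KDE or Akonadi actually uses.

```python
import shutil
import tempfile
from pathlib import Path

STAMP = ".format-version"  # hypothetical marker file inside the cache dir


def ensure_cache(cache_dir: Path, current_version: str) -> bool:
    """Make sure cache_dir only holds data written by current_version.

    Returns True if stale contents were wiped.
    """
    stamp = cache_dir / STAMP
    stale = cache_dir.exists() and (
        not stamp.exists() or stamp.read_text().strip() != current_version
    )
    if stale:
        shutil.rmtree(cache_dir)  # incompatible format: throw it all away
    cache_dir.mkdir(parents=True, exist_ok=True)
    stamp.write_text(current_version)
    return stale


with tempfile.TemporaryDirectory() as tmp:
    cache = Path(tmp) / "pim-cache"
    first = ensure_cache(cache, "5.0")      # fresh cache: nothing to wipe
    (cache / "index.db").write_text("old format")
    same = ensure_cache(cache, "5.0")       # same version: keep the data
    kept = (cache / "index.db").exists()
    upgraded = ensure_cache(cache, "6.0")   # after an update: wipe stale cache
    gone = not (cache / "index.db").exists()
```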

Flatpak and AppImage are a desperate attempt to minimize the damage caused by OS updates that shouldn't have been.

Docker and other container systems minimize the damage but also exist to nurse along programming architectures that are as current as the floppy disks and single core computers they were created on.

In the days of DOS we had to roll our own for everything. Then GUI DOS (Windows) came out. Despite the fact that AUTOEXEC.BAT had "win" at the end of it, and exiting Windows dropped you right back at the C:\> prompt, Microsoft called it an operating system.

With DOS we had interrupt-driven programming.

About the time Windows came out, we had a lot of TUI and graphical libraries that introduced event-driven programming.

Modern user interfaces and many embedded systems are programmed in immediate mode.
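Immediate mode isn't spelled out further here, so a toy Python sketch of the idea: there are no persistent widget objects or callbacks. Every frame, the code re-declares the whole UI from the current state, and a widget call both "draws" itself and reports whether it was clicked this frame. The `button` and `frame` functions and the text "renderer" are hypothetical stand-ins for a real library such as Dear ImGui.

```python
from typing import Optional


def button(label: str, clicked: Optional[str], out: list[str]) -> bool:
    """Immediate-mode widget: draw it now, return True if clicked this frame."""
    out.append(f"[{label}]")
    return clicked == label


def frame(state: dict, clicked: Optional[str]) -> list[str]:
    """Rebuild the entire UI from state; only `state` survives between frames."""
    out: list[str] = [f"count = {state['count']}"]
    if button("+1", clicked, out):
        state["count"] += 1
    if button("reset", clicked, out):
        state["count"] = 0
    return out


state = {"count": 0}
for clicked in (None, "+1", "+1", None):  # one simulated click per frame
    screen = frame(state, clicked)
```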

Best example for interrupt-driven is modem or serial communications. When the UART had assembled an entire byte, it fired a hardware interrupt (IRQ 4 for COM1, IRQ 3 for COM2). Your program would have hooked that interrupt with an interrupt service routine that immediately read the byte and put it somewhere. Everything was interrupts, even key presses on the keyboard and things sent to the display.
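The serial pattern above, simulated in Python: the "ISR" does the bare minimum (grab the byte, stuff it into a circular buffer, return), and the main program drains the buffer whenever it gets around to it. On real hardware the ISR would read the UART's receive register and acknowledge the interrupt controller; here `uart_isr` is a hypothetical function simply called with each byte, and the buffer size is arbitrary.

```python
from typing import Optional


class RingBuffer:
    """Fixed-size circular buffer: the classic ISR-to-main-program handoff."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        self.size = size
        self.head = 0      # next slot the ISR writes
        self.tail = 0      # next slot the main program reads
        self.overruns = 0  # bytes dropped because the buffer was full

    def put(self, byte: int) -> None:
        nxt = (self.head + 1) % self.size
        if nxt == self.tail:  # full: drop the byte, like a UART overrun
            self.overruns += 1
            return
        self.data[self.head] = byte
        self.head = nxt

    def get(self) -> Optional[int]:
        if self.tail == self.head:  # empty
            return None
        byte = self.data[self.tail]
        self.tail = (self.tail + 1) % self.size
        return byte


rx = RingBuffer(8)  # holds at most 7 bytes: one slot stays empty to tell full from empty


def uart_isr(byte: int) -> None:
    """Hypothetical receive ISR: store the byte and get out fast."""
    rx.put(byte)


for b in b"ATDT":  # bytes "arriving" from the modem
    uart_isr(b)

received = bytearray()
while (b := rx.get()) is not None:  # main program drains at its leisure
    received.append(b)
```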

Event-driven programming, no matter who created it, had a single main event loop. Basically, everything would put messages on an event queue, and the main event loop would process them as it got to them. The 80286 was a single-core processor and not all that fast (by today's standards), so this bottleneck was viewed as a great programming paradigm. There were switch statements in the main event loop (or in one function it called) that were thousands of lines long. Eventually C++ helped hide a lot of that. Application frameworks like Qt and others added the concept of signals and slots. Oh, they still had a main event loop, and many signals could be queued on it, but a signal could also directly execute a function in a different class that had been declared a slot.
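A stripped-down Python version of that structure: one queue, one main loop, and a dispatch table standing in for the thousand-line switch statement. The `Signal` class is a hypothetical imitation of the signals-and-slots idea, not Qt's API; it only mimics the difference between a direct connection, where the slot runs immediately, and a queued one that waits its turn on the event loop (roughly what Qt calls `Qt::DirectConnection` and `Qt::QueuedConnection`).

```python
from collections import deque
from typing import Callable

event_queue: deque = deque()
log: list[str] = []

# The "giant switch": message type -> handler.
HANDLERS: dict[str, Callable[[object], None]] = {
    "key": lambda payload: log.append(f"key {payload}"),
    "paint": lambda payload: log.append("repaint"),
}


def post(kind: str, payload: object = None) -> None:
    event_queue.append((kind, payload))


def run_event_loop() -> None:
    """Single main loop: process messages one at a time, in arrival order."""
    while event_queue:
        kind, payload = event_queue.popleft()
        if kind == "call":  # a queued slot invocation
            payload()
        else:
            HANDLERS[kind](payload)


class Signal:
    """Hypothetical signal: slots connected either directly or queued."""

    def __init__(self) -> None:
        self.slots: list[tuple[Callable[[], None], bool]] = []

    def connect(self, slot: Callable[[], None], queued: bool = False) -> None:
        self.slots.append((slot, queued))

    def emit(self) -> None:
        for slot, queued in self.slots:
            if queued:
                post("call", slot)  # runs later, on the event loop
            else:
                slot()              # runs right now, inside emit()


saved = Signal()
saved.connect(lambda: log.append("direct slot"))
saved.connect(lambda: log.append("queued slot"), queued=True)

post("key", "A")
saved.emit()
post("paint")
run_event_loop()
```

The direct slot runs before anything already sitting in the queue; the queued slot waits behind the key press.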

Enter the world of multi-core computers.

This event loop really has to execute on one core, and most everything it executes also runs on that same core. The trouble is you have 1-N additional cores hurling things onto its event queue. Anyone who has ever been in a vehicle where the lanes neck down from 4 or more to 1 knows what happens here.
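The lane merge in Python terms: several producer threads (standing in for the other cores) push messages onto one `queue.Queue`, and a single consumer thread plays the main event loop. Python's GIL muddies any timing measurement, so this sketch only shows the shape of the funnel: every message, whichever thread produced it, gets handled by the one consumer.

```python
import queue
import threading

N_PRODUCERS = 4          # stand-ins for the other cores
MESSAGES_EACH = 1000
STOP = object()          # sentinel telling the loop to exit

events: queue.Queue = queue.Queue()  # the one queue everybody shares
handled_count = 0
handled_by: set[str] = set()  # names of threads that processed messages


def producer(pid: int) -> None:
    for i in range(MESSAGES_EACH):
        events.put((pid, i))


def main_event_loop() -> None:
    """The single consumer: every message funnels through here."""
    global handled_count
    while True:
        msg = events.get()
        if msg is STOP:
            break
        handled_count += 1
        handled_by.add(threading.current_thread().name)


loop = threading.Thread(target=main_event_loop, name="main-loop")
loop.start()
workers = [threading.Thread(target=producer, args=(p,)) for p in range(N_PRODUCERS)]
for w in workers:
    w.start()
for w in workers:
    w.join()
events.put(STOP)  # queued behind every producer message, so nothing is lost
loop.join()
```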

So, before I cure insomnia for anyone who got this far.

Too many programmers aren't formally trained, which means they don't consider the big picture when developing software. Microsoft's bloatware is legendary, and they tell you to throw hardware at it rather than fixing their code. Tons of features and capabilities get added to a single executable with one massively overworked main event loop.

Add onto that decades of being told you had to use shared libraries, to the point that much of what you need cannot be statically linked. (Think Chrome and other web browser libraries.) The shared libraries then kick the three-legged stool out from under your application when an untested update gets forced out.
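How a forced library update knocks the stool over, reduced to a toy check in Python: a dynamically linked application records the shared-library sonames it needs (ELF binaries list these as `DT_NEEDED` entries), and if an update replaces `libfoo.so.2` with `libfoo.so.3`, the loader can no longer satisfy it. The library names are made up, and real resolution is done by the dynamic loader, not code like this. A statically linked program, DOS-style, needs nothing and is immune.

```python
def missing_libraries(needed: list[str], installed: set[str]) -> list[str]:
    """Return the sonames an application needs that the system no longer provides."""
    return [soname for soname in needed if soname not in installed]


dynamic_app_needs = ["libc.so.6", "libfoo.so.2"]  # hypothetical DT_NEEDED list
static_app_needs: list[str] = []                  # everything linked in

before_update = {"libc.so.6", "libfoo.so.2"}
after_update = {"libc.so.6", "libfoo.so.3"}       # update bumped the soname
```

A soname bump at least fails loudly; an untested update that keeps the same soname but changes behavior passes this check and breaks things anyway.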

Speaking as one who has had to do packaging, the final humiliating kick to the crotch is the fact that there are something like 20 different package managers. While .deb kind of plays nice on distros claiming to be Debian-based, an .rpm created on Fedora or whatever will not be compatible with all RPM-based distributions. There are some that have "improved" the RPM format. The AUR takes the approach of making everyone build everything from source, fine, but you still have to know the correct system install directories. This gets really kinky when you have systems with multi-arch support. There are no solid rules for the placement of architecture/platform-specific header, source, executable, and shared library files.
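To make the "no solid rules" point concrete, here is where x86 shared libraries conventionally land on three distro families, as a Python lookup table: Debian/Ubuntu multiarch uses a GNU-triplet directory, Fedora/RHEL uses `/usr/lib64` for 64-bit and `/usr/lib` for 32-bit, and Arch uses `/usr/lib` for 64-bit and `/usr/lib32` for 32-bit multilib. Treat it as a sketch of common defaults, not an exhaustive list; a real packaging script should ask the build tooling (for example `dpkg-architecture -qDEB_HOST_MULTIARCH` or the RPM `%{_libdir}` macro) rather than hard-code paths. The `x86_32` key is just a label chosen for this sketch.

```python
# (distro family, architecture) -> conventional shared-library directory
LIBDIR = {
    ("debian", "x86_64"): "/usr/lib/x86_64-linux-gnu",
    ("debian", "x86_32"): "/usr/lib/i386-linux-gnu",
    ("fedora", "x86_64"): "/usr/lib64",
    ("fedora", "x86_32"): "/usr/lib",
    ("arch", "x86_64"): "/usr/lib",
    ("arch", "x86_32"): "/usr/lib32",
}


def libdir(family: str, arch: str) -> str:
    try:
        return LIBDIR[(family, arch)]
    except KeyError:
        raise ValueError(f"no known convention for {family}/{arch}") from None


# The same 32-bit library lands in three different places...
x86_32_dirs = {fam: libdir(fam, "x86_32") for fam in ("debian", "fedora", "arch")}
# ...and /usr/lib means 32-bit on Fedora but 64-bit on Arch.
```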

Flatpak and AppImage try to minimize the interaction with the actual host operating system.

Docker and the other container systems are an attempt to nurse along the main event loop while getting back to DOS. Docker doesn't do much for you; you build your own little world inside the container. If you build in some form of cross-container communication, each container can theoretically run on its own core, thus distributing the load. As long as nobody updates the actual Docker software on the host, you should be better insulated from host update catastrophes. Having said that, if insert-distro-here pushes out an untested network software update that drops support for your network chipset, you are hosed.
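The "each container gets its own loop" idea boils down to partitioning: route each message to one of several independent queues, each drained by its own loop, instead of funneling everything through one. A Python sketch with a hypothetical keying scheme (a CRC32 of a session name, used because Python's built-in `hash()` of strings is randomized per process). The queues here are drained one after another; actual parallelism would come from separate processes or containers.

```python
import zlib
from collections import deque

N_LOOPS = 3  # hypothetical: one event loop per container


def route(key: str, n: int = N_LOOPS) -> int:
    """Pick a loop for a message; the same key always goes to the same loop."""
    return zlib.crc32(key.encode()) % n


queues = [deque() for _ in range(N_LOOPS)]
messages = [(f"session-{i % 5}", i) for i in range(20)]
for key, payload in messages:
    queues[route(key)].append((key, payload))


def drain(q: deque) -> list[int]:
    """Each loop only ever sees its own share of the traffic."""
    out = []
    while q:
        _, payload = q.popleft()
        out.append(payload)
    return out


results = [drain(q) for q in queues]
```

Because a given key always lands on the same loop, messages for one session still arrive in order, which is what keeps partitioned loops sane.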

Short answer

Linux (all flavors) has now achieved parity with Windows: just throw hardware at it.

Both DOS and Linux used to run from a single floppy.

Some distros still do, but do you still have a floppy drive? Full disclosure, I still have multiple LS-120 drives.

This rambling post should have cured everyone's insomnia!